Linklog - Feb 2015

Intro

As it generally takes a lot of time to write a proper blog post, I have decided to give in to my laziness and post more often by simply sharing links to things I found during the week or month. It will also help me keep track of links that I find interesting.

Automatically syncing files using rsync and inotifywait / fswatch

Although Emacs can connect directly to servers through SSH, I like to keep my code local first and then upload it to a server, for example to run a Hadoop job or some machine learning algorithm. For that, rsync is really nice, and I initially wrote a small function that I bound to a keyboard shortcut:

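```elisp
;; Upload the local "sentency" project to the host "vmx" with rsync,
;; skipping lib/stanford-ner and the files directory.
(defun sync-sentency ()
  (interactive)
  (shrink-window-if-larger-than-buffer)
  ;; The trailing "&" makes shell-command run rsync asynchronously,
  ;; so Emacs stays responsive while the upload runs.
  (shell-command
   "cd ~/development ; rsync --exclude='lib/stanford-ner' --exclude=files -az --progress sentency vmx:sentency &"))

(global-set-key (kbd "C-c C-y") 'sync-sentency)
```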

But then I grew tired of hitting that shortcut all the time, so I did a bit of research and found a couple of commands: fswatch and inotifywait.

The first one is available for many *NIX systems. Below are a couple of interesting links and scripts:

- http://stackoverflow.com/questions/1515730/is-there-a-command-like-watch-or-inotifywait-on-the-mac

- https://gist.github.com/senko/1154509

I ended up modifying an existing project to adapt it to my needs, and you can find the code at https://github.com/mfcabrera/fswatch-rsync. It basically checks for changes to files and uploads them accordingly using rsync. So, no more hitting shortcuts.
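The linked project relies on fswatch events. As a rough, portable illustration of the same idea, here is a minimal Python sketch that swaps the event watcher for plain polling: it snapshots file modification times every `interval` seconds and runs rsync whenever something changed. The function names (`snapshot`, `has_changed`, `rsync_command`, `watch_and_sync`) and the one-second interval are my own assumptions and are not taken from fswatch-rsync; only the host `vmx` and the exclude patterns come from the Emacs function above.

```python
import os
import subprocess
import time
from typing import Dict, List, Sequence


def snapshot(root: str) -> Dict[str, float]:
    """Map every file below `root` to its last modification time."""
    mtimes: Dict[str, float] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtimes[path] = os.stat(path).st_mtime
            except FileNotFoundError:
                # The file disappeared between listing and stat; ignore it.
                continue
    return mtimes


def has_changed(before: Dict[str, float], after: Dict[str, float]) -> bool:
    """True if a file was added, removed or modified between two snapshots."""
    return before != after


def rsync_command(src: str, dest: str, excludes: Sequence[str] = ()) -> List[str]:
    """Build the rsync argument list (archive mode, compressed, with excludes)."""
    cmd = ["rsync", "-az"]
    cmd += [f"--exclude={pattern}" for pattern in excludes]
    cmd += [src, dest]
    return cmd


def watch_and_sync(src: str, dest: str, excludes: Sequence[str] = (),
                   interval: float = 1.0) -> None:
    """Poll `src` forever and run rsync whenever something changed.

    Hypothetical helper: edits inside excluded paths still trigger a run;
    rsync then simply has nothing new to transfer.
    """
    previous = snapshot(src)
    while True:
        time.sleep(interval)
        current = snapshot(src)
        if has_changed(previous, current):
            # check=False: a failed upload (e.g. a network hiccup) should not
            # stop the watcher; the next change triggers another attempt.
            subprocess.run(rsync_command(src, dest, excludes), check=False)
            previous = current
```

Run from `~/development`, a call like `watch_and_sync("sentency", "vmx:sentency", excludes=["lib/stanford-ner", "files"])` would roughly automate the keyboard-shortcut version (without `--progress`, which only helps interactively). Event-based tools such as fswatch or inotifywait avoid rescanning the whole tree every second, so they remain the better choice for large projects.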

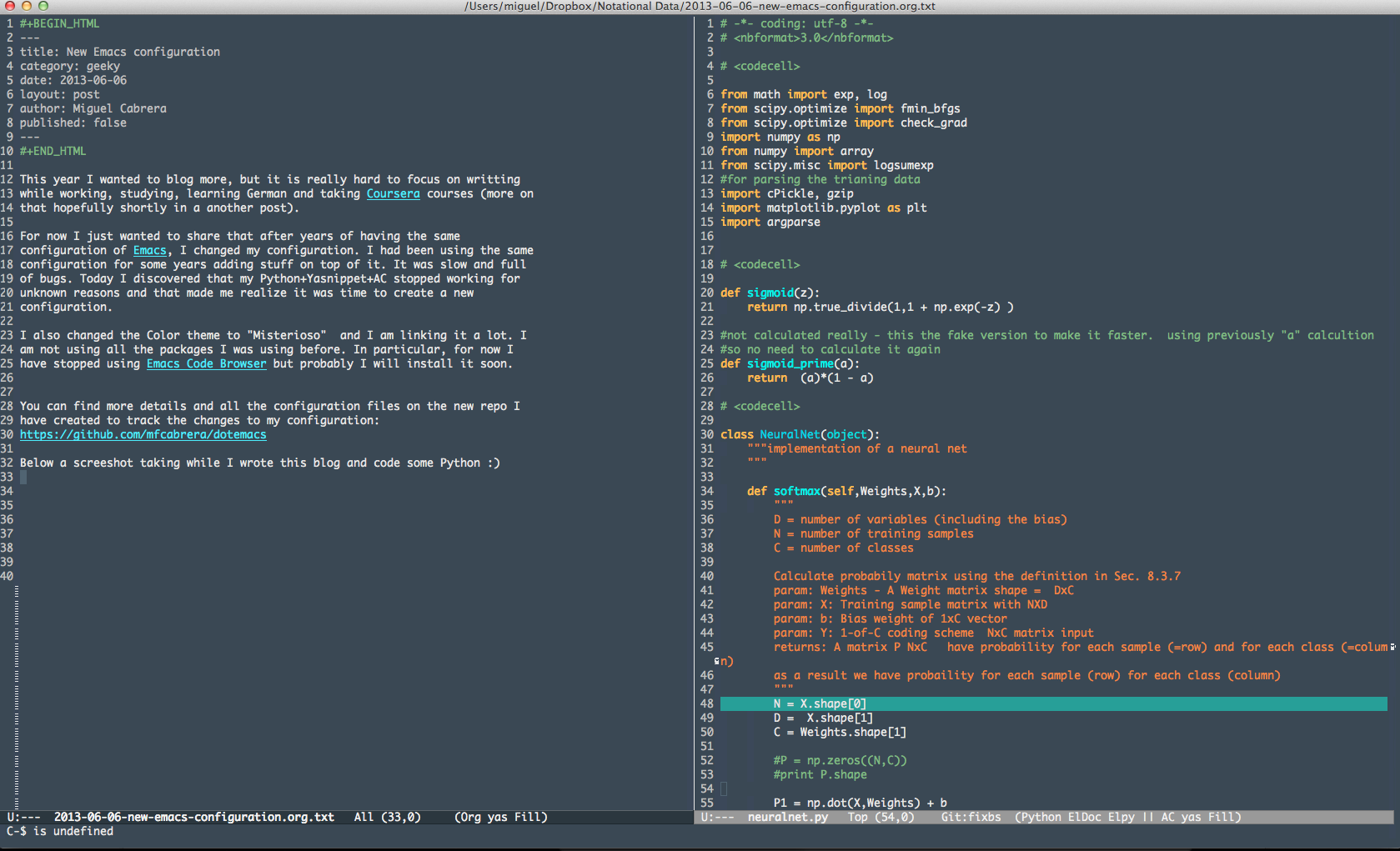

Elpy, PEP8 and friends

I thought my coding style in Python was pretty good. Then I started using pep8 and friends to check my code, and I realized I sucked big time. The good thing is that, thanks to modes like Elpy (a nice Python mode for Emacs), I can check my code while I write and learn the proper style along the way. Hopefully that will help me become a better Pythonista (or Pythoneer?). A nice tutorial can be found here.

Scratch Programming Language

GitHub aliases

Although for Git I use the super cool Magit (a Git mode for Emacs), sometimes I like to use the command line directly. At TrustYou we use Git, although not as professionally as we would like. I found a nice post by one of the GitHub developers featuring a lot of useful Git aliases: http://haacked.com/archive/2014/07/28/github-flow-aliases/

Working with Open Data Notebooks

While looking for information on how to integrate JS and the IPython Notebook, I stumbled upon the files for the class Working with Open Data. There are no videos of the class, but the slides, the readings and the IPython notebooks are really nice.

Share Your Stack

An interesting site where you can share your technology stack: http://stackshare.io/stacks

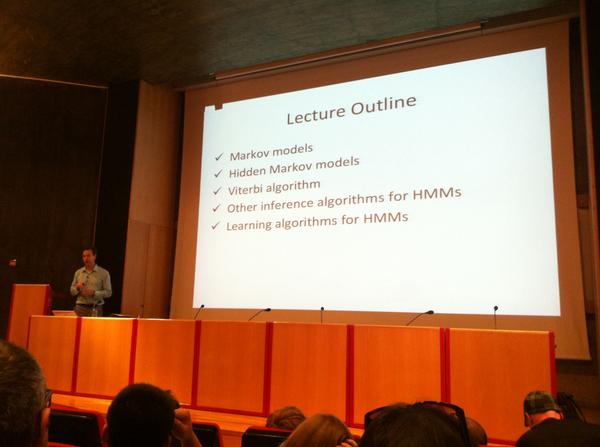

MOOCs

Some MOOCs I am trying to follow (in that order):

- Mining of Massive Datasets

- Statistical Learning

- Introduction to Probability - The Science of Uncertainty

- Introduction to Ableton Live

There are also some MOOCs running that I find really interesting but have no time to follow:

I actually took the last one, but I did not complete all the homework. It is a really nice overview of music production.

Upcoming MOOCs that I find attractive and will try to follow: